Abstract:

Personal privacy devices (PPDs) typically emit strong tones or swept narrowband signals to jam nearby GNSS receivers and deliberately cause loss of lock. Receiver-side excision methods mitigate such interferers by tracking their instantaneous frequency and removing the corresponding components either in the time domain (e.g., notch filtering) or in a transform domain (e.g., Fourier-domain excision). Effective mitigation without degrading the GNSS signal requires precise placement of the excision; misplacement can sometimes harm performance even more than leaving the interferer unmitigated. Trackers are therefore evaluated both for dynamic tracking performance, especially against fast-sweeping jammers, and for steady-state estimation jitter, reflecting a fundamental trade-off between tracking speed and variance. We propose a new frequency tracking algorithm for a complex first-order IIR notch filter that uses the first and second moments of the gradient of a standard output-power-minimization objective and achieves a better speed-variance trade-off than existing methods. We first describe the algorithm, which is inspired by the Adam optimizer widely used to train neural networks, and its relation to existing adaptive notch filter (ANF) update rules. Next, we evaluate its performance over a wide range of simulated and recorded interference events, including publicly available datasets, and benchmark it against state-of-the-art methods, including designs based on frequency-locked loops (FLLs). Finally, we analyze resource utilization and latency, and quantify the effects of quantization and pipeline delays on the proposed method and on representative state-of-the-art baselines. The proposed Adam-based method requires only marginally more resources than a vanilla ANF while providing better suppression in most scenarios.

Highlights:

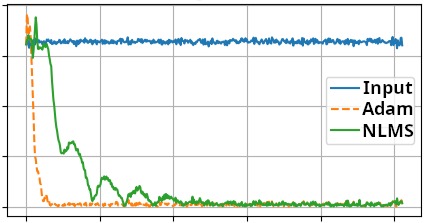

- Adam-ANF provides stronger suppression of fast chirps than conventional NLMS-based ANF designs, owing to its faster convergence.

- The tuning space of Adam-ANF hyperparameters is wider than that of NLMS-ANF, resulting in higher robustness against changing conditions.

- Adam-ANF efficiently supports the general class of Adaptive Neural Filters (ANeF), which generalize conventional ANFs to potentially higher-dimensional parametrizations.
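For comparison with the NLMS-based designs mentioned in the highlights, a conventional NLMS-style ANF update can be sketched for the same assumed one-pole/one-zero structure: the zero moves along the negative pseudo-gradient, normalized by the instantaneous power of the filtered state. The normalization by |x_f[n-1]|^2 plus a small regularizer, and the names `NlmsANF`, `mu`, and `eps`, are illustrative assumptions; published NLMS-ANF variants may instead use a smoothed power estimate.

```python
import cmath
import math


class NlmsANF:
    """Hypothetical baseline: complex one-pole/one-zero ANF whose zero z0
    is updated with an NLMS-style normalized gradient step."""

    def __init__(self, k_alpha=0.9, mu=0.05, eps=1e-12):
        self.k_alpha = k_alpha  # pole contraction factor (sets the notch width)
        self.mu = mu            # normalized step size
        self.eps = eps          # regularizer against division by zero
        self.z0 = 0j            # adapted zero; its angle is the frequency estimate
        self.xf_prev = 0j       # AR-section state x_f[n-1]

    def step(self, x):
        # AR (pole) section, then MA (zero) section
        xf = x + self.k_alpha * self.z0 * self.xf_prev
        y = xf - self.z0 * self.xf_prev
        # Move z0 along y * conj(x_f[n-1]) (negative pseudo-gradient of |y|^2),
        # normalized by the power of the filtered state
        norm = abs(self.xf_prev) ** 2 + self.eps
        self.z0 += self.mu * y * self.xf_prev.conjugate() / norm
        self.xf_prev = xf
        return y

    def freq(self):
        # Normalised frequency estimate in cycles/sample
        return cmath.phase(self.z0) / (2 * math.pi)


# Usage: track a continuous-wave tone at 0.1 cycles/sample
anf = NlmsANF()
for n in range(2000):
    anf.step(cmath.exp(2j * math.pi * 0.1 * n))
est = anf.freq()
```

With this normalization, a single step size `mu` sets both the convergence speed and the steady-state jitter, which is the speed-versus-variance trade-off the abstract refers to.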